Back in 2018, when I was considering different approaches to fixing a minor serialization issue in the project I had been working on, I encountered an article mentioning the ES6 Proxy object for the first time (I know, I know...). After applying the freshly learned pattern and realizing that the problem was gone with just a few lines of code, I was happy that my solution worked as expected and looked elegant, but I also felt a little guilty that I had simply skipped the Proxy section every time I reviewed the ES2015 additions. Because of that shame, I felt obliged to gather more in-depth knowledge about that highly underestimated ES6 feature.

I started my research and quickly realized that there are not many valuable sources describing the nature of proxies. Of course the whole idea behind them is pretty straightforward, but the articles and blog posts trying to shed more light on it are often limited to basic (and almost always imaginary) scenarios which do not fully unleash its power. That was obviously not enough for me and - being pissed off (again!) - I decided to dig deeper and fill that gap myself. That is when the idea of creating a new set of articles branded with my name popped into my mind for the first time and now, after a couple of months, I am more than happy to present tripleequals.dev to you.

Back in secondary school

I guess the fact that proxies were shipped together with ES6 is not a surprise to you (even if it was before, I mentioned it above). The initial draft, however, was created way earlier than you might suppose.

Remember the fuss around ES4? No worries, me neither. I was attending secondary school back then and I would be lying if I said that JavaScript with all of its dynamism was my main area of interest. As an ordinary nerd I started to fall in love with the procedural programming offered by my school's poor curriculum, which was satisfying enough. Even if I had started exploring the web development world at that time, I would probably have lost interest quite quickly - the level of abstract thinking required to take the leap from procedural to object-oriented (not to mention functional) programming is not typical for regular teenagers. Right then, in 2006, when I was leading a carefree life and spending every single moment of my spare time on the football pitch with friends, the father of JavaScript, Brendan Eich - along with the ES community - discussed the catch-all feature proposal for ECMAScript 4.

The catch-all mechanism was intended to enable object metaprogramming in ES-compliant scripting languages. More specifically, it allowed developers to set up client-defined traps that would be invoked any time the engine executed fundamental operations (access, read, write - represented by the verbs: has, get, set; and similar) on already existing objects.
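To make the three verbs concrete, here is a minimal sketch written against today's Proxy API rather than the 2006 proposal (whose syntax is not shown in this article). The names log, target and proxy are made up for illustration.

```javascript
// Each entry records which fundamental operation the engine performed.
const log = []
const target = { answer: 42 }

const proxy = new Proxy(target, {
  // Trap for the `in` operator.
  has(obj, prop) {
    log.push(`has ${String(prop)}`)
    return Reflect.has(obj, prop)
  },
  // Trap for property reads.
  get(obj, prop, receiver) {
    log.push(`get ${String(prop)}`)
    return Reflect.get(obj, prop, receiver)
  },
  // Trap for property writes.
  set(obj, prop, value, receiver) {
    log.push(`set ${String(prop)}`)
    return Reflect.set(obj, prop, value, receiver)
  },
})

const exists = "answer" in proxy // triggers `has`
const before = proxy.answer      // triggers `get`
proxy.answer = 43                // triggers `set`
```

Every trap here only records the operation and then forwards it to the target, so the proxy behaves exactly like the underlying object.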

Gecko used to expose a non-standard __noSuchMethod__ property on the Object prototype to easily override the default behavior when non-existent methods were called on an object. Such a metaprogramming operation can be easily implemented using the currently standardized catch-all mechanism, which is why it was marked as obsolete in Gecko 43, after proxies had been released, and should not be used anymore.

The catch-all feature eventually did not make it into ES4, nor into ES5. Nonetheless, ES5 introduced a few metaprogramming concepts into the standard - both Object.defineProperty() and Object.create() allowed their clients to intersect the base and meta object layers to some extent. A property descriptor (passed to Object.defineProperty()) or a map of such entities (when calling Object.create()) has the following shape:

{
  value,        // represents the corresponding value of the property being added;
  get,          // a function (trap) that acts as a getter of the property (read access);
  set,          // similarly, a trap that will be executed whenever clients assign a new
                // value to the corresponding property (write access);
  configurable, // boolean flag deciding whether the type of the property
                // can be changed or the property may be deleted from an object;
  enumerable,   // decides if the property should appear when iterating over
                // object properties with for-in, Object.keys() and similar;
                // the property will still be present in Object.getOwnPropertyNames()
                // though;
  writable,     // describes if the property may be changed via assignment operator.
}

Keep in mind that a single descriptor is either a data descriptor (value, writable) or an accessor descriptor (get, set) - mixing the two groups throws a TypeError. Based on the above we can change the default engine behavior for some of the basic operations on JavaScript objects, which seems to be fine for at least some initial basic catch-all use cases.
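A small sketch of both descriptor kinds may help here. The thermostat object and its properties are invented for this example, and the code sticks to ES5-era APIs.

```javascript
const thermostat = {}
let celsius = 20        // backing storage hidden from the object's clients
const accessLog = []

// Accessor descriptor: `get` and `set` act as traps for ONE named property.
Object.defineProperty(thermostat, "temperature", {
  get: function () {
    accessLog.push("read")
    return celsius
  },
  set: function (next) {
    accessLog.push("write")
    celsius = next
  },
  enumerable: true,
  configurable: false,
})

// Data descriptor: a read-only constant hidden from enumeration.
Object.defineProperty(thermostat, "unit", {
  value: "C",
  writable: false,
  enumerable: false,
  configurable: false,
})

thermostat.temperature = 22            // runs the setter
const current = thermostat.temperature // runs the getter
```

Note that each trap is tied to a property name known up front, which is exactly the kind of limitation the following sections run into.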

Except not really.

Testing the water

Before I elaborate more on the limitations of the static proxy implementation using ES5 features, let me quickly jump to the year 2009. On December 7th that year, Tom Van Cutsem, who was working with the Google team on Caja then, published a brief message on the ECMAScript mailing list:

Dear all,

Over the past few weeks, MarkM and myself have been working on a proposal for catch-alls for ES-Harmony based on proxies. I just uploaded a strawman proposal to the wiki:

http://wiki.ecmascript.org/doku.php?id=strawman:proxies

Any feedback is more than welcome. (...).

Cheers, Tom

It is worth mentioning that both Van Cutsem and the aforementioned Mark S. Miller had previously worked on the metaprogramming capabilities of the lesser-known programming languages they had been designing (AmbientTalk and E, respectively).

The originally posted resource is no longer valid, but thanks to the Internet Archive we can still read the more verbose information about the proxies proposal. If you do not want to dedicate your time to reading the whole feature specification, I will just quote the most important part to give you a general idea of the main motivation for introducing that new mechanism:

To enable ES programmers to represent virtualized objects (proxies). In particular, to enable writing generic abstractions that can intercept property access on ES objects.

Sounds like a property descriptor fed into Object.defineProperty()? Not even close. The Google duo took at least three steps forward to make JavaScript fully metaprogramming-compliant. The create() method of the new built-in native Proxy object allowed developers to set not only the basic traps listed above, but a bunch of more powerful property access handlers too:

{
  getOwnPropertyDescriptor, // intercepts Object.getOwnPropertyDescriptor(proxy, name)
  getPropertyDescriptor,    // Object.getPropertyDescriptor(proxy, name)
  getOwnPropertyNames,      // Object.getOwnPropertyNames(proxy)
  getPropertyNames,         // Object.getPropertyNames(proxy)
  defineProperty,           // Object.defineProperty(proxy, name, pd)
  delete,                   // delete proxy.name
  fix,                      // Object.{freeze|seal|preventExtensions}(proxy)
  has,                      // name in proxy
  hasOwn,                   // proxy.hasOwnProperty(name)
  keys,                     // Object.keys(proxy)
}

Given the above you can override almost every crucial object access behavior with your own virtualized implementation. You may wonder what (if anything) is missing from that list. What more could we add? Or - from the opposite point of view - what must we not add in order to prevent any critical vulnerabilities? Van Cutsem, Miller and others contributing to the proxies specification had asked themselves the same question and they did everything right in order to ensure security and prevent user-level code from altering the core operations of the JavaScript engine. They agreed on the list of invariants that should remain untouched:

- object identity (proxy === obj) - long live triple equals!

- Object.getPrototypeOf(proxy)

- proxy instanceof Foo

- typeof proxy

At that time the standard had no Object.setPrototypeOf() method. This functionality eventually became part of ECMAScript 6, which is why the current Proxy API allows virtualizing the Object.{get|set}PrototypeOf() calls on proxy objects.

Additionally, in order to intercept two function-specific operations, a second helper was defined. Proxy.createFunction() accepts a handler with all the user-defined traps presented before (functions are also objects in JavaScript) as its first parameter and the call and construct handlers as two supplementary arguments.
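Returning to the invariants listed above, the following sketch shows how they look in the current (ES6) API, not in the 2009 strawman. Foo, transparent and disguised are illustrative names only.

```javascript
function Foo() {}
const target = new Foo()
const transparent = new Proxy(target, {}) // empty handler: everything is forwarded

// Identity and typeof cannot be trapped.
const sameObject = transparent === target // false: the proxy is a distinct object
const typeOfProxy = typeof transparent    // "object", derived from the target
const isFoo = transparent instanceof Foo  // true, resolved through the target's prototype

// Prototype lookup, on the other hand, became trappable in ES6.
const disguised = new Proxy({}, {
  getPrototypeOf() {
    return Array.prototype
  },
})
const looksLikeArray = disguised instanceof Array // true: instanceof consults the trap
const isRealArray = Array.isArray(disguised)      // false: the target is a plain object
```

So of the four original invariants, identity and typeof are still off limits for handlers, while the prototype-related ones can now be virtualized (within the limits the engine enforces for non-extensible targets).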

Van Cutsem's message about the first official catch-all implementation proposal was later followed by his presentation at a Google Tech Talk and the paper he presented at the Dynamic Languages Symposium in 2010.

Gotta Catch'em All

The initially defined proxy API does not differ a lot from its current counterpart. Of course you can immediately notice that instead of using static methods of the new native object (Proxy.create() and Proxy.createFunction()), nowadays we instantiate it. Furthermore, both proxy creators have been condensed into one constructor (new Proxy). The constructor itself does not complain when you set up an apply trap for a non-callable target (when typeof target !== "function"), but such a proxy is not callable either, so the trap never runs and calling the proxy throws a TypeError. These and some other minor adjustments, however, do not violate the core concept. When we intend to create a new virtualized object, we have to provide two parameters - the target object (belonging to the base layer) and the property access handler (another object virtually located in the meta layer of the application).

Fair enough. Let's see how that magical "proxy mechanism" works in a simple use case. I am not going to write yet another implementation of the logging proxy or an imaginary validation mechanism just to prove that the whole thing works as expected (if you cannot stand skipping these examples, you can easily find them online on your own). Instead, I will focus on a function optimization technique called memoization. It does not matter if you first heard of it when you started playing with React hooks or if you had known it before - no specific knowledge and no particular implementation details are required to understand the following piece of code:
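As a quick illustration of the single constructor handling both function-specific traps, consider the sketch below. The greet function and the variable names are assumptions made for this example.

```javascript
function greet(name) {
  return "Hello, " + name
}

// One constructor, one handler: `apply` and `construct` live next to the other traps.
const callable = new Proxy(greet, {
  apply(target, thisArg, args) {
    return Reflect.apply(target, thisArg, args).toUpperCase()
  },
  construct(target, args) {
    // A construct trap must return an object.
    return { createdWith: args }
  },
})

const called = callable("proxy")           // goes through `apply`
const constructed = new callable("a", "b") // goes through `construct`

// Creating a proxy with an `apply` trap around a non-callable target does not throw...
const notCallable = new Proxy({}, {
  apply() {
    return "never reached"
  },
})

// ...but the proxy is not callable, so invoking it fails before the trap could run.
let callError = null
try {
  notCallable()
} catch (error) {
  callError = error
}
```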

function createHandler() {
  return {
    previousArgs: undefined,
    cachedResult: undefined,
    // Assuming that target function is pure and does not depend on `this`
    apply: function (target, thisArg, args) {
      if (Array.isArray(this.previousArgs)
        && this.previousArgs.length === args.length
        && this.previousArgs.every((prevArg, i) => prevArg === args[i])) {
        return this.cachedResult
      }
      const newResult = target.apply(thisArg, args)
      this.cachedResult = newResult
      this.previousArgs = args
      return newResult
    }
  }
}

function withMemoFunc(target) {
  const handler = createHandler()
  return new Proxy(target, handler)
}

For a type-safe version of the above example click here.

For those who got lost somewhere in the middle of the conditional statement: this code creates proxied versions of pure functions that remember the previous set of arguments passed to them. Before reaching the target function itself, a call takes a "detour" - when the collection of parameters does not change between subsequent calls, instead of recomputing the result over and over again, the proxy simply returns the cached result. What needs to be underlined is that the memoized function does not create a complex cache map or anything similar (the space complexity of the handler is constant) - for the sake of this article remembering just the previous parameter list is perfectly fine.

Such a mechanism should be reserved for functions that execute time-consuming operations. It does not make much sense to add a memoization overhead to simple computations. Let me represent that heavy computation with the following example:

const timeConsumingAdd = (a, b) => {
  let startDate = new Date()
  let currentDate
  do {
    currentDate = new Date()
  } while (currentDate - startDate < 2000)
  return a + b
}

The time-consuming operation is simulated by a dummy loop waiting 2000ms before returning the sum of the two function parameters. To prove that it works, let's profile the function call with the help of the underestimated console.time() API:

console.time("add")
console.log(timeConsumingAdd(10, 20))
console.timeEnd("add")
// 30
// add: 2000.229248046875ms

Right. Now is the time to optimize our timeConsumingAdd():

const memoizedAdd = withMemoFunc(timeConsumingAdd)

And quick profiling again:

console.time("first add")
console.log(memoizedAdd(15, 49))
console.timeEnd("first add")
// 64
// first add: 2000.05712890625ms

console.time("subsequent add")
console.log(memoizedAdd(15, 49))
console.timeEnd("subsequent add")
// 64
// subsequent add: 0.12939453125ms

So here it is.

Despite being represented by a Proxy object, memoizedAdd() acts like a regular JavaScript function (typeof memoizedAdd === "function"). To be more specific - each entity containing an additional meta layer acts as a regular object of the same kind as its target, so we do not need to worry about any extra steps to avoid invalid type recognition.
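The transparency described above can be checked with a few lines. This is a minimal sketch with made-up names (add, proxiedAdd, trapCalls); it uses a cheap function instead of the two-second one so that it runs instantly.

```javascript
function add(a, b) {
  return a + b
}

let trapCalls = 0
const proxiedAdd = new Proxy(add, {
  apply(target, thisArg, args) {
    trapCalls += 1 // the only observable difference: our detour ran
    return Reflect.apply(target, thisArg, args)
  },
})

const sum = proxiedAdd(10, 20)

// Everything not covered by a trap is forwarded to the target function.
const kind = typeof proxiedAdd                   // "function"
const name = proxiedAdd.name                     // "add"
const arity = proxiedAdd.length                  // 2
const isFunction = proxiedAdd instanceof Function // true
```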

(Not that) good, old times

I am fully aware that the example of withMemoFunc() did not fully unleash the power of metaprogramming with proxies. That was sort of intentional. After all, we might not need a meta layer at all to implement a memoization helper for our pure functions. Now I am going to ask you to look at the catch-all mechanism from a wider perspective. In one of the previous sections I made an indirect promise to write more about ES5 limitations and now is the time to fulfill that promise. Time to start the time machine again and travel back to the pre-ES6 era.
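As a side note on the remark that memoization does not strictly need a meta layer: a plain closure can do the same job. The sketch below is one possible way to write it; memoizeLast and countingAdd are names invented here, and a call counter replaces the two-second delay.

```javascript
// Closure-based alternative: remembers only the most recent arguments and result.
// Assumes `fn` is pure, just like the proxied version.
function memoizeLast(fn) {
  let previousArgs
  let cachedResult
  return function (...args) {
    if (previousArgs
      && previousArgs.length === args.length
      && previousArgs.every((prevArg, i) => prevArg === args[i])) {
      return cachedResult
    }
    cachedResult = fn.apply(this, args)
    previousArgs = args
    return cachedResult
  }
}

let calls = 0
const countingAdd = (a, b) => {
  calls += 1 // counts how often the real computation runs
  return a + b
}

const memoized = memoizeLast(countingAdd)
const results = [memoized(15, 49), memoized(15, 49), memoized(1, 2)]
// `calls` is now 2: the second invocation was served from the cache.
```

One trade-off of this approach is that the wrapper is a brand new function, so properties such as name and length are those of the wrapper rather than of the original, whereas a proxy forwards them to the target.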

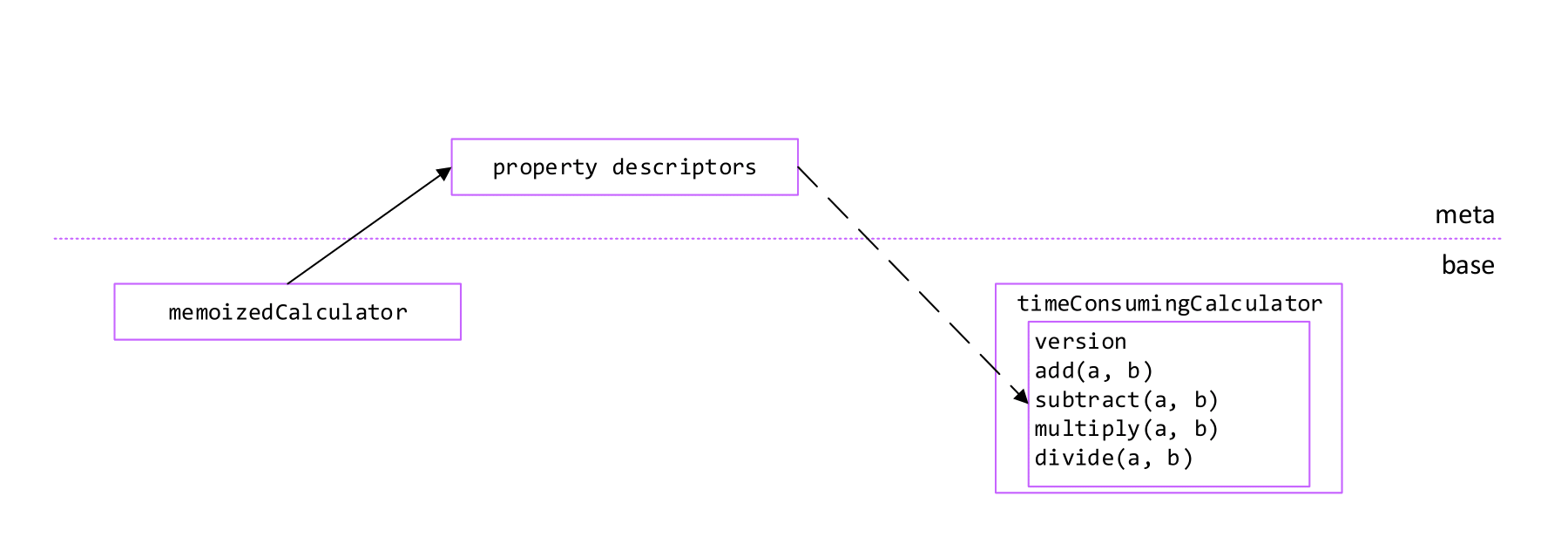

What if we have a service with a bunch of methods, each of them doing heavy computations? Extracting them and creating a separate memoized version of each seems like "the triumph of form over substance". Equipped with Object.create() and Object.defineProperty() we can be (or at least act) smarter. Excuse me for the vars - rules are rules, ES5 literally means ES5:

function withMemoEs5(targetObj) {
  var proxy = Object.create(Object.getPrototypeOf(targetObj), {})
  Object.getOwnPropertyNames(targetObj).forEach(function (name) {
    var propertyDescriptor = Object.getOwnPropertyDescriptor(targetObj, name)
    if (typeof targetObj[name] === "function") {
      var previousArgs
      var cachedResult
      Object.defineProperty(proxy, name, {
        // Assuming that target function is pure and does not depend on `this`
        value: function value() {
          var args = Array.prototype.slice.call(arguments)
          if (Array.isArray(previousArgs)
            && previousArgs.length === args.length
            && previousArgs.every(function (prevArg, i) { return prevArg === args[i] })) {
            return cachedResult
          }
          var newResult = targetObj[name].apply(this, args)
          cachedResult = newResult
          previousArgs = args
          return newResult
        },
        configurable: propertyDescriptor.configurable,
        enumerable: propertyDescriptor.enumerable,
      })
    } else {
      Object.defineProperty(proxy, name, propertyDescriptor)
    }
  })
  return proxy
}

The service to be optimized is defined as:

var timeConsumingCalculator = {
  version: 1,
  add: function add(a, b) {
    heavyComputation()
    return a + b
  },
  subtract: function subtract(a, b) {
    heavyComputation()
    return a - b
  },
  multiply: function multiply(a, b) {
    heavyComputation()
    return a * b
  },
  divide: function divide(a, b) {
    heavyComputation()
    return a / b
  },
}

All right. Time to make use of the static proxy creator (withMemoEs5()) and create a calculator with operations that are now going to be memoized:

var memoizedCalculator = withMemoEs5(timeConsumingCalculator)

Let's verify the memoization mechanism using console.time():

console.time("first add")
console.log(memoizedCalculator.add(11, 3))
console.timeEnd("first add")
// 14
// first add: 1999.701171875ms

console.time("subsequent add")
console.log(memoizedCalculator.add(11, 3))
console.timeEnd("subsequent add")
// 14
// subsequent add: 0.0546875ms

console.time("first subtract")
console.log(memoizedCalculator.subtract(11, 3))
console.timeEnd("first subtract")
// 8
// first subtract: 1999.552978515625ms

console.time("subsequent subtract")
console.log(memoizedCalculator.subtract(11, 3))
console.timeEnd("subsequent subtract")
// 8
// subsequent subtract: 0.013671875ms

Full ES5 static proxy implementation available on Github.
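The calculator methods above call heavyComputation(), which is not listed in this article. A minimal stand-in, assuming it is the same kind of busy-wait loop that timeConsumingAdd() uses, could look like this (the durationMs parameter is an addition made here so that the delay can be shortened while experimenting):

```javascript
// Hypothetical helper: blocks the current thread for roughly `durationMs` milliseconds.
// Written in ES5 style to match the surrounding examples.
function heavyComputation(durationMs) {
  var limit = typeof durationMs === "number" ? durationMs : 2000
  var start = Date.now()
  while (Date.now() - start < limit) {
    // busy-wait on purpose; a real service would do actual work here
  }
}
```

Called without arguments it blocks for about two seconds, which is consistent with the timings reported in the profiling output.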

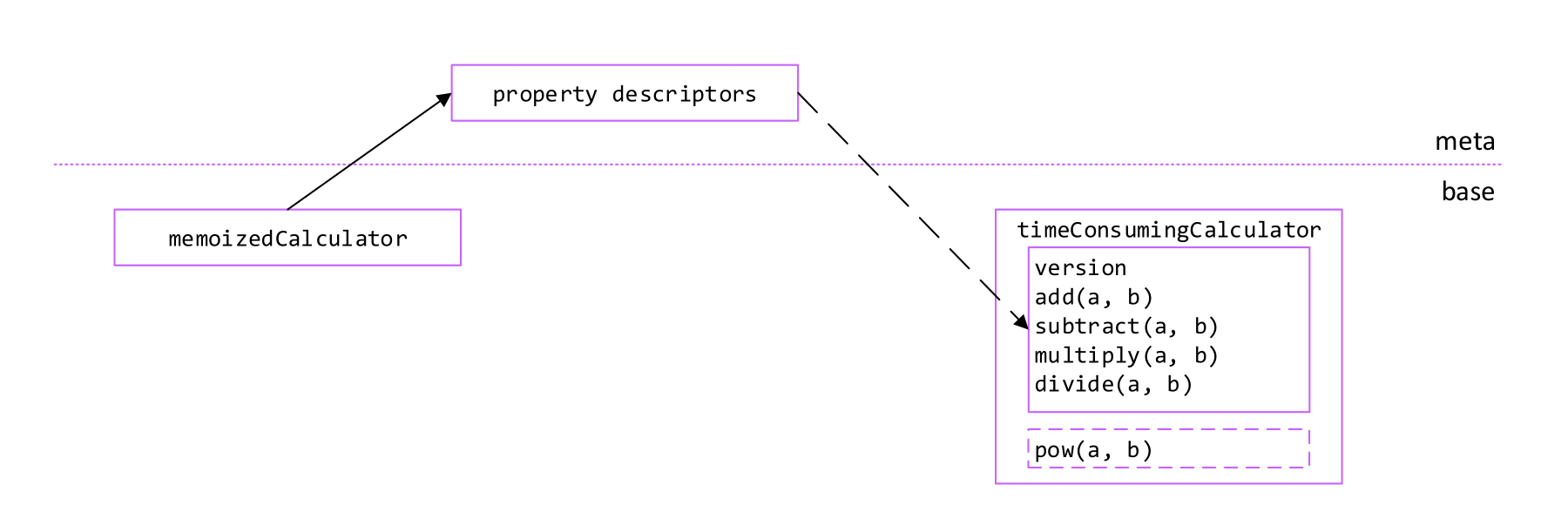

Looks good? Only at first sight. The memoization is working as expected, but only for the methods already defined within the timeConsumingCalculator service. What if we dynamically (at application runtime) add a new pow() method to the timeConsumingCalculator object?

timeConsumingCalculator.pow = function (a, b) {
  heavyComputation()
  return Math.pow(a, b)
}

Even though memoizedCalculator has some logic hidden within the meta layer, it is far from perfect. Structural changes (like adding new methods) to the target object will not be reflected in its shape. The linkage between the proxy object and the target object is weak - that is why we cannot say that the ES5 standard is fully compliant with metaprogramming principles and that is why I called the object returned by the withMemoEs5() function a static proxy.
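The weak linkage is easy to reproduce in isolation. The sketch below uses snapshotProxy(), a deliberately simplified stand-in for withMemoEs5() (no memoization, just the descriptor copying), so it should be read as an illustration of the problem rather than as the article's implementation.

```javascript
// Simplified stand-in for withMemoEs5(): copies the descriptors that exist RIGHT NOW.
function snapshotProxy(targetObj) {
  var proxy = Object.create(Object.getPrototypeOf(targetObj))
  Object.getOwnPropertyNames(targetObj).forEach(function (name) {
    Object.defineProperty(proxy, name, Object.getOwnPropertyDescriptor(targetObj, name))
  })
  return proxy
}

var calculator = {
  add: function (a, b) { return a + b },
}
var staticProxy = snapshotProxy(calculator)

// The target grows after the static proxy has been created.
calculator.pow = function (a, b) { return Math.pow(a, b) }

var seesAdd = typeof staticProxy.add // "function": copied at creation time
var seesPow = typeof staticProxy.pow // "undefined": the snapshot knows nothing about it

var powError = null
try {
  staticProxy.pow(2, 5)
} catch (error) {
  powError = error // TypeError: staticProxy.pow is not a function
}
```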

Back to the future

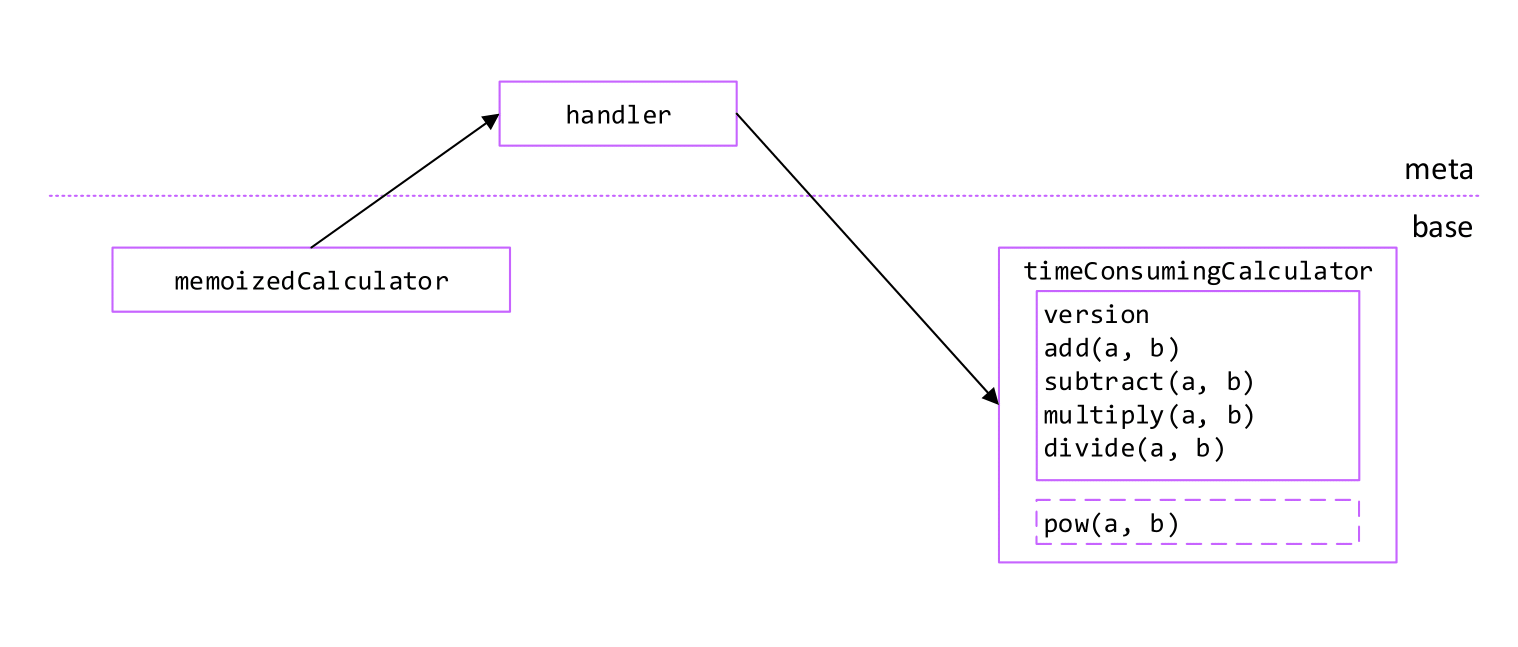

ES6 proxy addresses this problem. It tightly couples the handler in the meta layer with the target object. Such a binding allows intercepting not only structural changes to the proxied entity, but also modifications made to property descriptors. If you immediately thought about the observer pattern, you got the idea right. In fact, the Object.observe() implementation (which - in contrast to proxies - worked asynchronously) that existed in V8 a few years ago was withdrawn around the time proxies were released. Time to see the dynamic proxy in action.

function createHandler() {
  return {
    cache: new Map(),
    get: function (target, prop) {
      const { cache } = this
      if (typeof target[prop] === "function") {
        // Assuming that target function is pure and does not depend on `this`
        return function (...args) {
          const memoizedData = cache.get(prop)
          if (memoizedData && memoizedData.previousArgs.length === args.length
            && memoizedData.previousArgs.every((prevArg, i) => prevArg === args[i])) {
            return memoizedData.cachedResult
          }
          const newResult = target[prop].apply(this, args)
          cache.set(prop, { cachedResult: newResult, previousArgs: args })
          return newResult
        }
      } else {
        return target[prop]
      }
    }
  }
}

function withMemo(target) {
  const handler = createHandler()
  return new Proxy(target, handler)
}

Ladies and gentlemen (drums!), let me show you the magic:

const memoizedCalculator = withMemo(timeConsumingCalculator)

// Dynamic extension of target object (proxy untouched)
timeConsumingCalculator.pow = (a, b) => {
  heavyComputation()
  return a ** b
}

console.time("first pow")
console.log(memoizedCalculator.pow(2, 5))
console.timeEnd("first pow")
// 32
// first pow: 1999.9169921875ms

console.time("subsequent pow")
console.log(memoizedCalculator.pow(2, 5))
console.timeEnd("subsequent pow")
// 32
// subsequent pow: 0.255126953125ms

Voilà. Is that what Eich meant in 2006? Affirmative. The target object is now strongly bound to the handler object belonging to the meta layer. It does not matter if we decide to alter the target object structure at runtime - the proxy will still act as a real proxy, redirecting property accesses to client-defined detours.
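The observer idea mentioned earlier can be sketched with two traps. This is only an illustration under a few assumptions: observe() and the notify callback are hypothetical names, notifications are delivered synchronously, and only changes made through the proxy are reported.

```javascript
function observe(target, notify) {
  return new Proxy(target, {
    // Fires for Object.defineProperty() AND for plain assignments made through
    // the proxy, because an assignment ends up defining the property on the receiver.
    defineProperty(obj, prop, descriptor) {
      const ok = Reflect.defineProperty(obj, prop, descriptor)
      if (ok) notify(`define ${String(prop)}`)
      return ok
    },
    // Fires for the `delete` operator.
    deleteProperty(obj, prop) {
      const ok = Reflect.deleteProperty(obj, prop)
      if (ok) notify(`delete ${String(prop)}`)
      return ok
    },
  })
}

const changes = []
const calculator = { version: 1 }
const observed = observe(calculator, (change) => changes.push(change))

observed.version = 2                                            // value change
observed.pow = (a, b) => a ** b                                 // structural change
Object.defineProperty(observed, "version", { writable: false }) // descriptor change
delete observed.pow                                             // structural change
```

After these four statements, changes holds one entry per operation, in order: define version, define pow, define version, delete pow.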

Biology class

The list of simple use cases where ES6 proxies may come in handy is huge: profiling, mocking, validation, caching, access control, implementing the observer pattern and so on. While using the catch-all mechanism for these not-so-challenging scenarios is completely fine, the power of proxies is invaluable when dealing with more complex patterns. Wider perspective, remember?

Is there anything that metaprogramming may have in common with biology? I highly doubt it. There is one concept though that shares its terminology with biological cells. That concept is called a membrane. We can treat the membrane as a proxy wrapping an entire object graph. Object graphs may contain circular references - if one of the objects referenced by an entity within the object graph gets an additional meta layer, we must make sure that the other references to that object are updated as well. Imagine a simple circular doubly linked list with nodes defined as below:

class Node {
  prev = null // the previous Node in the list
  next = null // the next Node in the list
}

If we want to add a custom meta abstraction to our list, wrapping just the head of the list is not enough. Assuming that the list contains at least two elements, we will end up with the following:

console.log(head === head.next.prev) // true
const proxiedHead = new Proxy(head, handler)
console.log(proxiedHead === proxiedHead.next.prev) // false

The reference held by proxiedHead.next.prev still points to the base target object, which breaks the membrane isolation. Instead of reinventing the wheel and implementing the membrane on my own, I will just leave the link to an article written by... Tom Van Cutsem, who elaborates more on the membrane pattern and gives some advice on its implementation (long story short: proxies and another ES2015 feature - WeakMaps).
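To give a rough feeling for the "proxies plus WeakMaps" recipe, here is a heavily simplified sketch. It is not Van Cutsem's implementation: createMembrane() is a name made up for this example, it only wraps values read through the get trap (a full membrane also handles arguments, return values and writes), and it ignores the invariants that apply to non-writable, non-configurable properties.

```javascript
function createMembrane(root) {
  const wrappers = new WeakMap() // target object -> its one and only proxy

  function wrap(value) {
    const isObject = value !== null
      && (typeof value === "object" || typeof value === "function")
    if (!isObject) return value // primitives pass through untouched
    if (!wrappers.has(value)) {
      wrappers.set(value, new Proxy(value, {
        get(target, prop, receiver) {
          // Whatever leaves the graph gets wrapped as well.
          return wrap(Reflect.get(target, prop, receiver))
        },
      }))
    }
    return wrappers.get(value)
  }

  return wrap(root)
}

// A circular doubly linked list with two nodes.
const head = { name: "head" }
const tail = { name: "tail" }
head.next = tail
head.prev = tail
tail.next = head
tail.prev = head

const naiveHead = new Proxy(head, {})
const wrappedHead = createMembrane(head)

const naiveRoundTrip = naiveHead === naiveHead.next.prev        // false: leaks the raw head
const membraneRoundTrip = wrappedHead === wrappedHead.next.prev // true: same proxy every time
```

The WeakMap guarantees a single proxy per target, which is what preserves identity inside the membrane without keeping the wrapped objects alive longer than necessary.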

Given that the proxy API offers us yet another method of wrapping objects with the meta layer (Proxy.revocable()), access to the membrane can be easily revoked, which makes the whole proxied object graph secure.
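A short sketch of revocation, with a made-up secret object standing in for the protected graph:

```javascript
const secret = { token: "abc123" }

// Proxy.revocable() returns the proxy together with a function that disables it.
const { proxy: guarded, revoke } = Proxy.revocable(secret, {})

const beforeRevoke = guarded.token // works like a regular proxy

revoke() // from now on every operation on `guarded` throws

let revokedError = null
try {
  guarded.token
} catch (error) {
  revokedError = error // TypeError
}
```

The target itself stays intact; only the path through the proxy is cut off, which is why handing out revocable proxies instead of raw references lets the owner withdraw access later.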

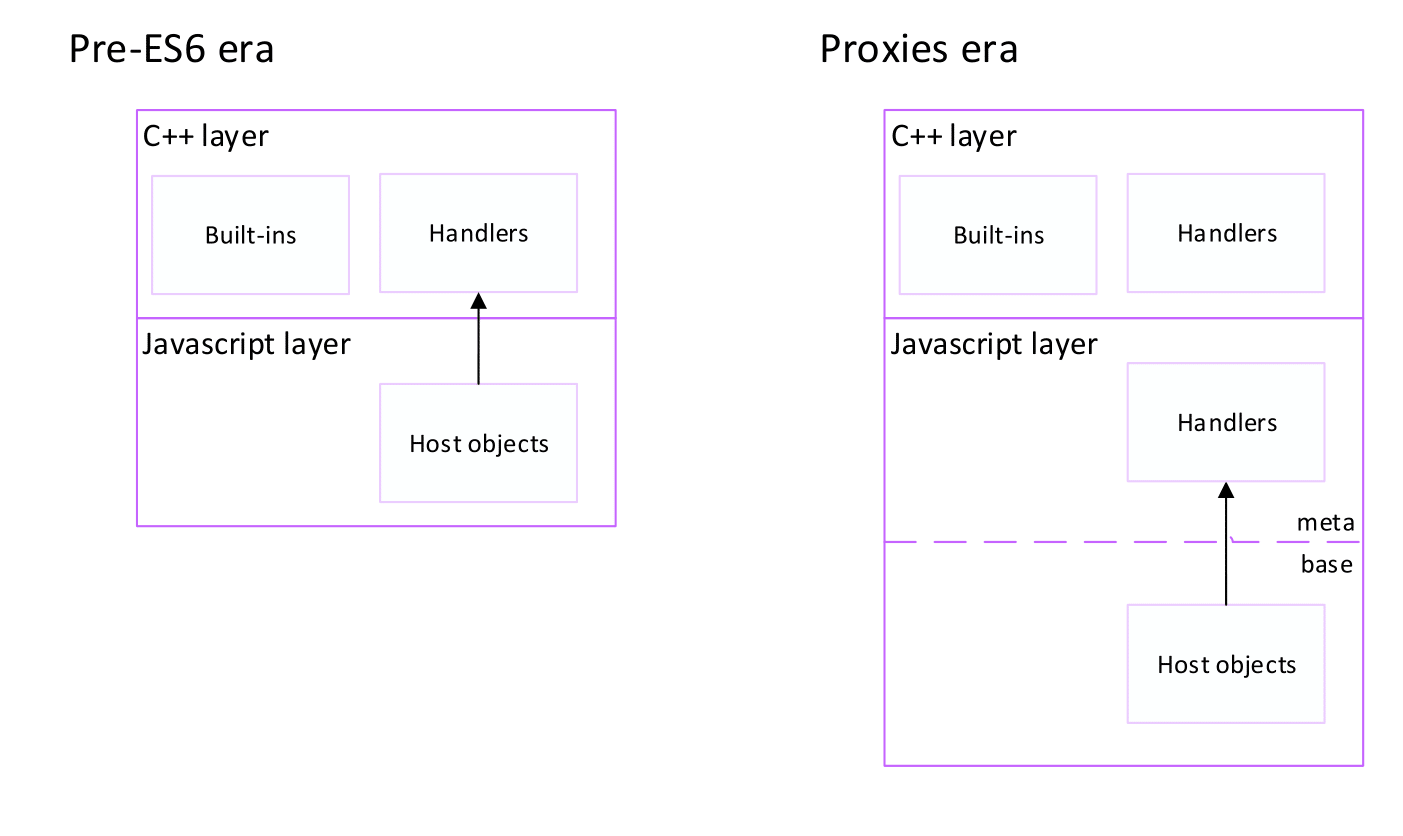

Those secure structures may be used in various scenarios - e.g. to allow web browser developers to create host objects inside the JavaScript layer of their engines. Before ES6 most of the host objects had to be defined in the lower-level layer of the browser environment, most likely written in C++. That required developers to be extremely careful about both security and performance - each interaction with a host object probably resulted in a few jumps between the JavaScript and C++ runtimes.

Now the need for the additional C++ overhead is gone and host objects can be self-hosted.

End of the journey

Wow. That was a really long journey. Hopefully you get the general idea of JavaScript metaprogramming and appreciate the effort put in by the Ecma Committee to bring it to life with the ES6 release. As you may have noticed, proxies are a highly underestimated yet very powerful tool that may serve various purposes and can help with overcoming everyday programming obstacles. Obviously we should not forget that proxied objects come with a small performance overhead (the V8 team has already refactored the code behind that feature to make it more efficient) - but in most cases the impact will be marginal.

Please feel encouraged to explore the metaprogramming world even more - I found the resources linked in this article very revealing, so if you have some spare time, I highly recommend reading them and discovering the whole domain on your own. Do not ignore yet another ECMAScript 6 deliverable - the static Reflect built-in. Even though that stuff seems to be boring at first glance, it really helps to sharpen our vision and makes us - ordinary programmers - better developers.
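As a small teaser for Reflect, the sketch below shows one situation where forwarding with Reflect.get() differs from a plain target[prop] lookup: getters combined with inheritance. All names (base, naive, faithful and the child objects) are invented for this example.

```javascript
const base = {
  label: "base",
  get description() {
    return `I am ${this.label}`
  },
}

// Forwards reads with a plain lookup: getters always run with `this === base`.
const naive = new Proxy(base, {
  get(target, prop) {
    return target[prop]
  },
})

// Forwards reads with Reflect.get(): getters run against the original receiver.
const faithful = new Proxy(base, {
  get(target, prop, receiver) {
    return Reflect.get(target, prop, receiver)
  },
})

// Objects that inherit from the proxies and override `label`.
const naiveChild = Object.create(naive, { label: { value: "child" } })
const faithfulChild = Object.create(faithful, { label: { value: "child" } })

const naiveResult = naiveChild.description       // "I am base"
const faithfulResult = faithfulChild.description // "I am child"
```

Each Reflect method mirrors a proxy trap and takes the same arguments, which makes it a convenient default for the "just forward it" branch of a handler.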